TechNews

Latest updates and insights on tech.

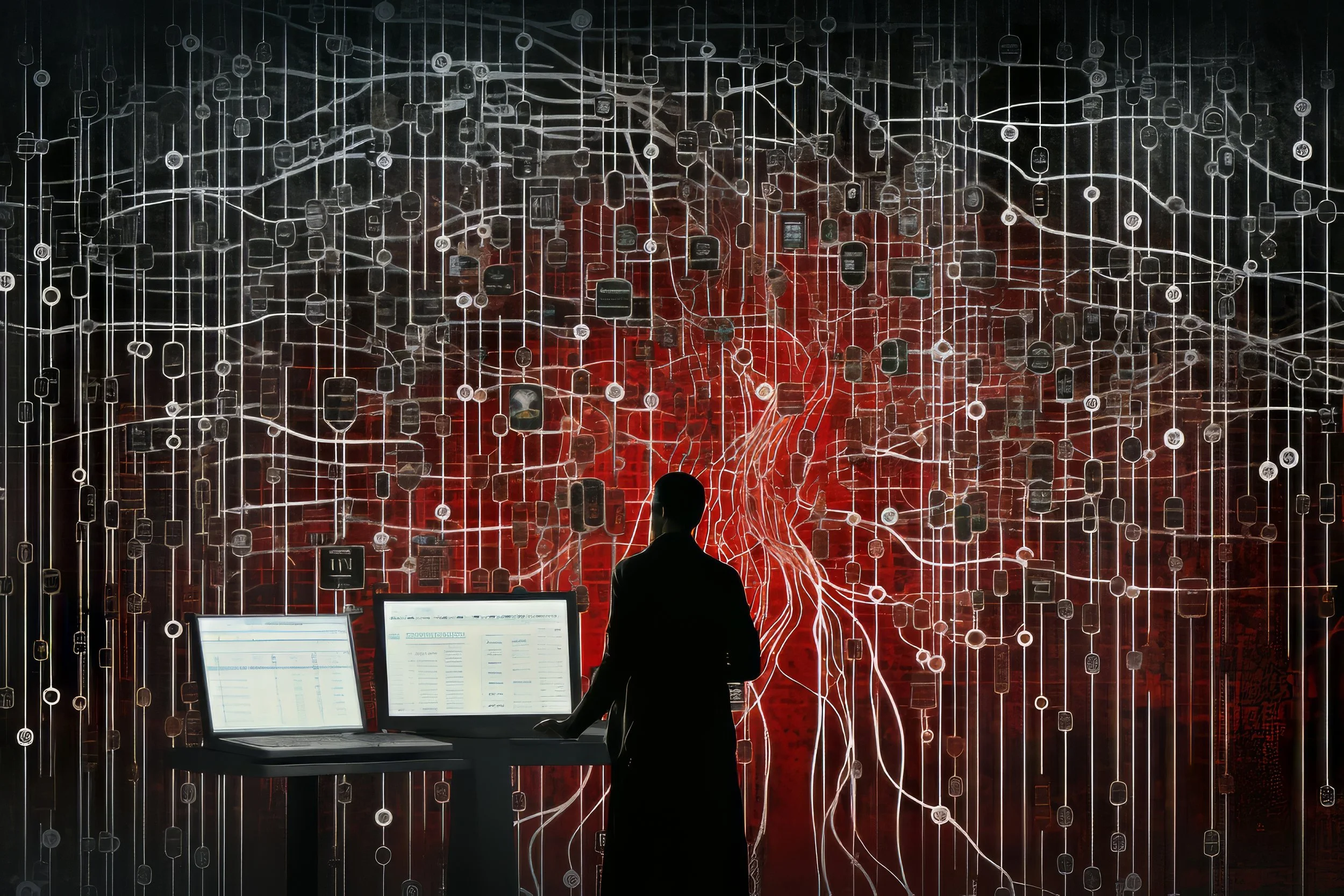

Exploring Neural Network Complexity

Neural network architectures have grown from single-layer perceptrons into deep models built from many stacked layers. Convolutional neural networks (CNNs) excel at image processing, recurrent neural networks (RNNs) handle sequential data, and transformer architectures are redefining natural language understanding. As research pushes into areas such as neuromorphic computing and explainable AI, these systems are finding new applications in healthcare, finance, and autonomous systems.
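To make the starting point of that progression concrete, here is a minimal sketch of a single perceptron trained with the classic perceptron update rule. It is an illustration only: the function names (`predict`, `train_perceptron`), the learning rate, the epoch count, and the toy AND-gate dataset are all assumptions chosen for this example, not something taken from a particular library or model.

```python
# Minimal perceptron sketch (illustrative; names and hyperparameters are assumptions).

def predict(weights, bias, x):
    """Return 1 if the weighted sum of inputs plus bias is positive, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=0.1, epochs=20):
    """Fit weights and bias with the perceptron learning rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # error is +1, 0, or -1; weights only change on a misclassification
            error = y - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy, linearly separable dataset: the logical AND of two binary inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(predictions)
```

A single perceptron like this can only separate classes with a straight line (or hyperplane), which is one reason deeper architectures such as CNNs, RNNs, and transformers stack many such units with nonlinear activations.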